Rendering Moving Pictures is Hard

It seems that Chris Sells thinks that animation is simple. He reckons it's just:

"changing an object's value over a range in a given time"

That's certainly a prerequisite, but I'd say there's a little more to it than that. Or rather, if you want things to look any good, you need to do more.

The real trick to getting moving pictures to look good lies in how you present them to the viewer. When rendering moving pictures of any kind, we usually want the user to perceive continuous smooth movement. This is hard because none of the widely-used motion picture technologies are able to present real continuous movement. They can all only show still images, they just create the illusion of motion by showing lots of still images in quick succession. If you do that right, it fools our eyes and we perceive it as motion.

In fact we are pretty easily deceived, so you can do a pretty shabby job of it and still fool the eye. However, while half-baked animation still looks like moving stuff, it's easy to tell the difference between that and high quality moving pictures. It's perfectly possible to play a FPS game like Doom 3 with a frame rate of 15 frames per second, but it doesn't look anything like as good as a frame rate of 85 per second.

Quality matters. This is one of the reasons you hear frame rates for games quoted a lot in graphics card benchmarks. However, there's more to quality than the frame rate.

Aliasing

There's a problem that crops up all the time when trying to reproduce (or fake up) real-life phenomena with computers. It's something that hits you whenever you try to represent something continuous using lots of discrete chunks. (E.g. representing pictures as lots of little pixels; storing sounds by taking lots of little samples; faking movement with lots of still pictures.)

The process of trying to represent continuous things in discrete chunks inevitably distorts the information. This distortion introduces ambiguities into the sound or pictures, and we sometimes see the wrong thing - we see 'aliases'.

An example you're likely to be familiar with is that of a rotating wheel on a television or cinema screen. Wheels on film or video sometimes seem to rotate in the wrong direction, or at a speed that looks plain wrong. This is particularly pronounced if you're looking at an old-fashioned wheel on a carriage or wagon, because the more spokes a wheel has, the easier it is for this problem to occur.

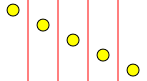

So why does the motion of wheels end up looking wrong? Well consider what happens when we try to represent the motion of a wheel as a series of still images or 'frames'. This image shows three such frames:

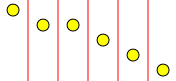

So far, so good. But look at what happens if we rotate the wheel a little more quickly. In the previous example, the wheel was being rotated by 7.5 degrees in each frame. But in this example, it is being moved by 42 degrees each time:

If you focus your attention on the small white dot, you can see that the rotation is still clockwise, and in fairly large increments. But if you just focus on the spokes of the wheel, it looks more like it is being rotated anticlockwise in very small steps. The rotational symmetry in the spokes of the wheel means that if it weren't for that little white marker dot, a clockwise rotation of 42 degrees would look identical to an anti-clockwise rotation of 3 degrees.

This is an example of 'aliasing' - the sequence of images that represents a wheel rotating quickly in one direction happens to look identical to the sequence of images for the same wheel rotating slowly in the opposite direction. When you get an ambiguity like this your eyes will usually tend to prefer one of the aliases over the other, and in this particular case, most people are predisposed to see the wrong one.

There are many such aliases we could create. If the rotation happens to occur at exactly the right speed (45 degrees per frame for this particular example) the wheel will appear to stand perfectly still. If it's very slightly faster, the wheel will appear to rotate in the correct direction, but far too slowly.
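Here is a minimal sketch of that arithmetic. It assumes a wheel with 8 identical, evenly spaced spokes, which I am inferring from the 45 degree figure above; the function name `apparent_rotation` is just something made up for illustration.

```python
def apparent_rotation(step_degrees: float, spokes: int = 8) -> float:
    """Return the smallest rotation per frame that produces the same images.

    A wheel with evenly spaced, identical spokes looks the same after turning
    by one spoke spacing, so steps that differ by a multiple of that spacing
    are indistinguishable. Positive is clockwise, negative is anticlockwise.
    """
    spacing = 360.0 / spokes
    # Fold the step into the range [-spacing/2, spacing/2).
    return (step_degrees + spacing / 2) % spacing - spacing / 2


for step in (7.5, 42.0, 45.0, 46.0):
    print(f"{step:5.1f} degrees per frame looks like {apparent_rotation(step):+.1f}")
```

With these inputs, 42 degrees folds to -3 (a slow anticlockwise creep), 45 folds to 0 (a stationary wheel), and 46 folds to +1 (the right direction, but far too slow).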

Anti-Aliasing Motion

This aliasing problem tends to occur with any conversion of a continuous input to a discrete representation. For bitmaps, aliasing occurs when the level of detail is too fine for the available resolution. With sound, it occurs when the frequencies approach the limits of what the sound format can support. And with motion, it occurs once things start moving too quickly for your frame rate to cope. (And in all cases, the effect is accentuated when input frequencies are close to the sampling rate - in the previous example, I deliberately made the wheel rotate at a rate where the spokes would hit the same positions about once per frame.) Fortunately there are techniques available to mitigate these problems.

Aliasing only occurs because the detail you are trying to represent exceeds the capabilities of your representation. The solution is to avoid trying to represent the relevant detail. For sound recording, this is done by filtering out high frequencies before trying to sample the sound. With images, it is done by smoothing things out a bit (e.g. font smoothing), reducing the detail in order to avoid aliasing artifacts. And with moving pictures, we use 'motion blur'.

With motion blur, instead of making each image in the sequence a sharp snapshot of where the items are at a given instant in time, each frame is an average over the whole interval between one frame and the next. So in the first example, each spoke would be represented as a grey blurred bar over the 7.5 degree angle that it sweeps across in that frame. (I don't have the tools to hand to produce such an image right now, but imagine, if you will, a version of the first image where each of the spokes is slightly thicker and a bit blurred at the edges.) In the second image, the spokes are sweeping across a whole 42 degrees from one frame to the next. Since that covers most of the interval between the spokes, you'd just see a big grey blur, rather than seeing the individual spokes.

This would eliminate the aliasing - the wheel would no longer look like it was moving backwards. You'd just see a blur, which is what you see in real life when looking at a fast-moving wheel.

The problem with motion blur is that it makes the frames a lot more expensive to render than simple snapshots, and isn't even very straightforward to do. You can sort of approximate it by generating a much higher frame rate and then averaging the results, but this just pushes the problem into the realm of higher frequencies rather than eliminating it entirely. To do it properly you effectively need to perform integration of pixel values over the relevant time frame, which is, in general, a bit tricky.
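To make the "render more frames and average them" approximation concrete, here is a rough sketch on a single row of pixels. The one-pixel dot, the row width and the choice of 16 subsamples are all assumptions picked for illustration. This is the cheap approximation, not the proper integration.

```python
def render_snapshot(position: float, width: int) -> list:
    """Render a one-pixel-wide bright dot on a dark row of `width` pixels."""
    row = [0.0] * width
    index = int(position)
    if 0 <= index < width:
        row[index] = 1.0
    return row


def render_blurred(start: float, end: float, width: int, subsamples: int = 16) -> list:
    """Average several sharp snapshots taken across one frame interval."""
    total = [0.0] * width
    for i in range(subsamples):
        # Sample at the midpoint of each sub-interval between start and end.
        t = (i + 0.5) / subsamples
        snapshot = render_snapshot(start + (end - start) * t, width)
        total = [a + b for a, b in zip(total, snapshot)]
    return [value / subsamples for value in total]


# A dot that moves from pixel 2 to pixel 6 during a single frame.
print(render_snapshot(2.0, 8))
print(render_blurred(2.0, 6.0, 8))
```

The snapshot puts all the brightness in one pixel, while the averaged frame spreads the same total brightness evenly over the four pixels the dot crossed. The cost is obvious: 16 renders to produce one frame.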

However, I'm not about to ask for motion blur in Avalon. I don't regard this kind of motion artifact as a big problem - we're all used to seeing it on television and cinema screens, and for the most part it's not all that intrusive, except for certain pathological cases like period dramas with lots of highly-spoked vehicles... Besides, there's a much more egregious problem that I'm much more eager to see fixed.

Presentation-Induced Aliasing

The problems discussed so far are endemic to all forms of video and film, and are regarded as acceptable (if slightly annoying) in professional quality production. However, there is another potential source of aliasing artifacts - the presentation pipeline itself. This tends to produce much more visible problems, which are not regarded as acceptable for typical broadcast quality images. Indeed, quite a lot of money gets spent on the necessary equipment required to avoid these problems for television broadcasts. (And, for all I know, film production, but I've never worked in the film industry so I wouldn't know.)

How can your presentation pipeline introduce extra aliasing artifacts? A common way is when there is more than one point at which sampling occurs. Remember, sampling is the act of chopping your input up into discrete lumps - and in the case of film or video (or computerised animation), this means creating a stream of frames. But why on earth would you do that more than once? Surely we create the frames at the point where we go from a continuous representation to a discrete one. This would usually happen inside the camera - the purpose of a film or video camera is to convert continuous input (whatever moving scene the camera is pointing at) into a series of frames. The camera is at the start of the pipeline, meaning that everything between the camera and the screen is dealing with frames - why would you need a second stage that converts stuff to frames when you're already dealing with frames?

Well consider what happens if you watch moving pictures originally produced in another country. For UK readers, maybe you're watching an episode of Seinfeld. For US readers maybe you're watching an episode of Monty Python's Flying Circus. (For readers in other locales, pick whichever of the above was made in the television format your country does not use...) Both of these examples present a problem, for a simple reason: not everyone uses the same frame rate.

There are an astonishing number of different video standards, but the majority derive more or less directly from one of two standards, NTSC or PAL. (Unless you're French, in which case you are special. But you knew that already.) There are numerous details that make these two standards different, but the single most important feature for this discussion is that NTSC video contains 60 fields for every second of video while PAL contains just 50. PAL exchanges a slightly lower frame rate for better resolution. And the original reason for this is that these field rates match the frequency of the AC that comes out of power sockets in the countries in which these two standards were invented. (Also I'm not going to go into detail on the distinction between fields and frames, but for those of you who thought the respective frame rates were actually 30 and 25, yes, technically that's true, but any normal video camera actually takes one snapshot per field not per frame, so the number of distinct sampled images used to represent motion is determined by the field rate, which is double the nominal frame rate.)

So if you are watching a British program in the US, then in any given second, your television will be expecting to receive 60 fields because it'll be expecting NTSC video. But because the program was recorded on British equipment, it will be a PAL recording and so there will only be 50 fields of video for each second. So if you want to watch the program at its natural speed, this means that an extra 10 fields will have to be found from somewhere to pad it out. And if I'm watching a US program in the UK, there will be 10 fields too many, so we'll need to work out a way of paring that down to the 50 my TV is expecting. (And we'll also have to work out what to do with all that extra resolution that's available on UK video feeds that a US television won't be able to make use of, and likewise, how to fill in all the extra resolution that a UK television expects, but which won't be present in the US video source. This is basically the same problem as the field rate mismatch problem - these are just spatial and temporal versions of the same underlying problem.)

The process of dealing with these mismatches is called "frame rate pull-up" (or pull-down). The obvious solution is just to play the video back at the wrong speed... Play the UK source at 60 fields per second even though it was recorded at 50. However, not only does this change the playback time, it has a noticeable effect on the pitch of people's voices. For mismatches of a few percent, you can get away with this, but from 50 to 60 is just too big, and you have to do something different.

The obvious solution most people come up with at this point is to simply duplicate or drop frames. Play out every 5th frame twice, and you've converted your 50fps source to a 60fps source. Drop every 6th frame, and you've gone from 60fps to 50fps. Unfortunately this doesn't work very well because it wreaks havoc with the quality of the movement.
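As a sketch of what that naive conversion amounts to: for each output frame, show whichever source frame is most recent at that moment. The function names here are made up for illustration, and real converters work on fields and do a good deal more.

```python
def source_frame_for(output_index: int, source_rate: int, output_rate: int) -> int:
    """Pick the most recent source frame at the time an output frame is shown."""
    # Output frame i is shown at time i / output_rate. Integer maths avoids
    # floating-point rounding.
    return output_index * source_rate // output_rate


def convert(source_rate: int, output_rate: int, count: int) -> list:
    """List the source frame shown for each of the first `count` output frames."""
    return [source_frame_for(i, source_rate, output_rate) for i in range(count)]


print(convert(50, 60, 12))  # [0, 0, 1, 2, 3, 4, 5, 5, 6, 7, 8, 9]
print(convert(60, 50, 10))  # [0, 1, 2, 3, 4, 6, 7, 8, 9, 10]
```

In the pull-up case, one source frame in every five is shown twice. In the pull-down case, one source frame in every six (frame 5 here) is never shown at all.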

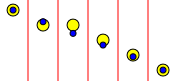

How does dropping/duplicating frames damage the quality? Well consider a simple animation where a dot moves down the field of view - here are 5 (rather tall and narrow) frames from such an animation:

Notice how the ball moves down by an equal amount in each frame - with all the frames laid out side by side here, they form a straight line. If the frames are played out at a constant rate, this will make it look like the ball is moving down at a constant speed. We will perceive this as smooth movement. If the display is a strobing light source (e.g. a CRT), the ball will flash on briefly in each of the positions in turn, and if our eyes track this movement, this results in the image of the ball appearing on the same place on our retina each time, which is exactly what we'd see if our eye were tracking the movement of a real ball. On a continuously-lit display (such as a flat panel screen), the ball will look more blurred - as our eyes move to track the movement, the ball remains illuminated all the time, so it will actually smear across an arc. The size of the smear will depend on the frequency with which we present new images - a higher frame rate means less blur, and a better-looking moving image.

(Actually, the response characteristics of all the various display types are a bit more complex than simple on/off or instantaneous flashes, so in practice what we see is a little more complex, but that's a topic for another time.)

So what happens if we want to add an extra frame? This is exactly what we would need to do if this were a 50 fps input and we needed to pull the frame rate up to 60fps. Here's what happens if we duplicate one of the frames:

Notice that we now have a rather obvious kink in the line, when we look at the frames side by side. When the frames are played out as an animation, this kink translates into a discontinuity in the movement. Exactly how we perceive this kink will depend on the frame rate. For a very slow frame rate such as 10 frames a second, the ball will appear to halt briefly as it falls. If we are playing out at a video-like rate of 60 frames per second, the stop will be so brief that it won't look like the ball comes to a halt. However, that's not to say that we won't be able to perceive the kink. It will be visible as a slight jiggling of the ball as it moves - a lack of smoothness in the motion.

To understand why this is, consider what would happen if the animation pattern shown were extended to last a little longer - imagine the sequence were 30 times longer. (This would be long enough to last three seconds, easily time for your eyes to focus on and follow the moving object.) As your eyes track the ball, they will move continuously down the screen, matching the average speed with which the ball moves. (Our eyes expect to see objects moving fairly smoothly, so they won't track instantaneous stops and starts.) This image shows the same animation sequence, with the point at which the eye would be looking on each frame overlaid as a blue dot:

Notice how relative to where the eye is looking, the ball starts to fall and then leaps up before falling back down to catch up again. And this is exactly what the eye will perceive - the ball will wiggle up and down above and below where the eye is tracking, rather than seeming to move smoothly.
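The same effect can be shown numerically. This sketch assumes the ball moves one unit per source frame, that frames are repeated using the "most recent source frame" rule, and that the eye glides along at exactly the ball's average speed. Which frame gets repeated, and therefore the sign of the offset, is an arbitrary choice here and may not match the picture.

```python
from fractions import Fraction


def tracking_offsets(source_rate: int, output_rate: int, count: int) -> list:
    """Ball position minus eye position for each output frame.

    The ball advances one unit per source frame. The eye is assumed to move
    at the ball's average speed, which is source_rate / output_rate units per
    output frame.
    """
    offsets = []
    for i in range(count):
        ball = i * source_rate // output_rate          # repeated-frame position
        eye = Fraction(i * source_rate, output_rate)   # smooth tracking position
        offsets.append(ball - eye)
    return offsets


for i, offset in enumerate(tracking_offsets(50, 60, 13)):
    print(i, float(offset))
```

For 50 to 60 the offset is not constant: it jumps away from the eye and creeps back over every six output frames, then repeats. With matched rates every offset is zero, which is what smooth motion looks like.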

This periodic jumping is a problem you will always get when repeating frames in order to change animation rates. The frequency of the jiggling is determined by the difference in frequency between the two frame rates. So in this case, going from 50 to 60 frames per second results in a 10Hz jiggle. That's actually fairly fast, but easily discernible as a shuddering in the motion. For larger differentials, the shuddering may be so fast that it is perceived as blur. But this shuddering looks really bad for more closely-matched (but not quite exactly matched) frame rates. For example, going from 61Hz to 60Hz will cause a 1Hz shudder, in other words one jump every second. It will look as though the motion has slipped slightly once a second. (That's why this motion artifact is sometimes called cogging - it looks a bit like the motion of cogs when a tooth is missing!)
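You can check the "difference between the two rates" claim by brute force. This sketch counts how many repeated or skipped source frames occur in one second of output, again assuming the simple "most recent source frame" rule; `glitches_per_second` is a name invented for this example.

```python
def glitches_per_second(source_rate: int, output_rate: int) -> int:
    """Count repeated or skipped source frames in one second of output."""
    glitches = 0
    for i in range(output_rate):
        current = i * source_rate // output_rate
        following = (i + 1) * source_rate // output_rate
        # A step of exactly 1 is normal playback; 0 is a repeat, 2 or more is a skip.
        glitches += abs(following - current - 1)
    return glitches


print(glitches_per_second(50, 60))  # 10
print(glitches_per_second(61, 60))  # 1
```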

The irony is that getting it almost but not quite right turns out to be much worse than getting it wrong by a large margin - occasional 'stepping' or 'cogging' once or twice a second is much more distracting than a fast shudder or blur. But of course it would be much better if we could avoid the problem altogether.

(Aside: In case you're curious, here's how television networks adapt between 60Hz and 50Hz feeds. Some networks just use frame repetition/dropping. However, higher quality television networks use fairly sophisticated equipment which analyzes the movement on screen, and attempts to deduce what frames a camera that was running at the target framerate would have recorded. With such a system, pretty much everything you see is a fiction - something the frame rate converter thinks is a pretty good approximation to the original moving image. And most of the time it works pretty well - a lot better than simply dropping or duplicating frames. Equipment exists that can do this in real time. However, the kind of image processing grunt required to do this in real time is beyond the capabilities of a PC, so it'll be a few years before this kind of technique becomes viable on the desktop. Not that many years though - special-purpose hardware for this is already sufficiently cheap that it crops up in consumer electronics. I have a 100Hz television, and it is able to do this kind of frame rate interpolation to map 50Hz video onto 100Hz output. In fact it can do better than that - it can even take 24Hz or 25Hz input (those are the usual frame rates for film) and turn it back into convincing-looking 100Hz output. So if Moore's law keeps going, it probably won't be that long before a generic CPU can do this trick without requiring special purpose hardware.)

So presumably we just need to avoid ever trying to convert from one frame rate to another? What could be simpler? Sadly, there's a snag.

Frame Rates and Monitors

The big problem with PCs is that the graphics card is usually in charge of the frame rate. Want to watch some 60 frames per second video footage? Tough - your graphics card has been configured for a 75Hz refresh rate, so everything's going to look a bit rough.

Even if you configure your monitor to refresh at some convenient multiple of the frame rate you require there's still a problem - the clock on the graphics card that works out how often to refresh the display runs independently of the computer's internal clock. Any clocks that are not somehow synchronized with one another will inevitably tend to drift gradually. Television solves this problem by refreshing the screen at whatever rate the broadcast network says; televisions don't generate that clock locally, they simply recover the clock from the broadcast signal, meaning that they never drift away. (But this doesn't mean that the network can just switch between 50Hz and 60Hz broadcasts to solve the frame rate problems with imported programs. Televisions tend to have a limited range of frequencies over which they work properly, so lots of viewers would just get a broken up picture. Modern TVs can cope because they're usually designed to work in all markets, but older ones usually can't.) With a computer, the graphics card can rarely be instructed to use an external clock source. (Actually a few graphics cards can do this, and I'm told that media center PCs mandate this facility from the graphics card, so this might start to become more common.)

So with a computer, your only option is typically to try and make the source of frames work at the same speed as your monitor. For a video feed of any kind this isn't going to work very well - you could watch your 60Hz video at an 85Hz refresh rate, but it's going to look like Benny Hill, and sound like the Chipmunks. The only situations in which you really have this option are those where your screen supports a frame rate which is pretty close to what you require (or a multiple of your source frame rate), or those where the source frame rate is negotiable.

When would the frame rate be negotiable? When frames are being generated from scratch, which is exactly what happens in most games, and in most graphics systems that support animation, such as Avalon or Flash. In these systems, the underlying representation is essentially scalable and that's something that can work for you in time as well as space. So not only do scalable graphics mean that you can render images at any resolution, it also, in principle, means that you can render at any frame rate. In Avalon, animation source objects like DoubleAnimation don't get to decide the frame rate. They are required to be able to supply values for any point in the 'timeline' of animation. Avalon gets to decide when the frames are going to appear in this timeline. So in principle, Avalon should be able to generate frames at exactly the interval required by the monitor. In practice, I'm seeing juddery motion with the current preview release, so I'm guessing they're not doing this yet. I'm also seeing tearing, which suggests that the presentation is simply not properly synchronized with the monitor.
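To illustrate the idea of an animation that can be asked for its value at any time, here is a sketch in Python rather than anything resembling the real Avalon API. The values 0, 600 and 5 seconds are borrowed from the XAML sample later in this post; the function names and the two refresh rates are assumptions for illustration only.

```python
def rectangle_left(time_seconds: float, start: float = 0.0, end: float = 600.0,
                   duration: float = 5.0) -> float:
    """Value of a repeating linear animation at an arbitrary point in time."""
    progress = (time_seconds % duration) / duration
    return start + (end - start) * progress


def frame_values(refresh_hz: int, frame_count: int) -> list:
    """Sample the animation once per monitor refresh."""
    return [rectangle_left(i / refresh_hz) for i in range(frame_count)]


# The same animation sampled for two different monitors.
print(frame_values(60, 4))
print(frame_values(75, 4))
```

The animation itself knows nothing about frames. Whoever drives it picks the sample times, so a 60Hz monitor gets steps of about 2 pixels and a 75Hz monitor gets steps of about 1.6, with no frame repetition needed in either case.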

Tearing

Tearing is a different kind of motion artifact from aliasing. It occurs if new frames are delivered into the frame buffer at the wrong moment. (The frame buffer is the memory on the video card containing the image on screen.) The effect is most visible in objects with clearly-defined vertical sides that are moving horizontally. When tearing occurs, one or more discontinuities occur in these vertical sides - the object appears to have been torn. It looks something like this:

To understand how tearing occurs, consider how pictures are delivered from the graphics card to the screen. The entire image cannot be sent in one go - there aren't enough wires in a video cable to do that. The image is instead sent one pixel at a time. The video card starts at the top left, delivering the first line of pixels and then moves onto the next line and so on. When it gets to the end, it starts again from the top.

Imagine what happens if a blue square is being animated from left to right across the screen. This will be done by drawing a series of frames into the video card's frame buffer, each with the blue square slightly further to the right than before. Suppose that one of these new frames gets written to the frame buffer when the video card happened to be half way through sending the relevant section of the frame buffer to the screen. It will have sent the monitor the first few scan lines with the blue block in one position, but after the update, the remaining scan lines will be sent with the block further to the right. The image that appears on screen will look like the one above.
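Here is a toy model of that scan-out, using rows of text in place of scan lines. The frame size, the square's positions and the row at which the update lands are all made-up values; the point is only that the monitor ends up with the top of one frame and the bottom of another.

```python
def scan_out(old_frame: list, new_frame: list, update_row: int) -> list:
    """Rows sent before the update come from old_frame, the rest from new_frame."""
    return old_frame[:update_row] + new_frame[update_row:]


def draw_square(left: int, size: int = 4, width: int = 12) -> list:
    """Render a square as rows of text, with '#' standing in for blue pixels."""
    row = "." * left + "#" * size + "." * (width - left - size)
    return [row] * size


old = draw_square(left=2)
new = draw_square(left=5)
for row in scan_out(old, new, update_row=2):
    print(row)
```

The first two rows show the square at its old position and the last two show it at the new one, so its vertical edges have a step in them. Synchronizing updates with the monitor amounts to making sure `update_row` is always 0.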

If you're really unlucky, you can end up with this kind of thing happening repeatedly frame after frame. (This can happen if you manage to get your frame buffer updates almost but not quite in sync with the monitor.) In this case, the tearing persists for as long as the object is moving. In less pathological cases, the tearing is intermittent, but it's still enough to be disturbing.

With the following XAML, I see fairly obvious tearing artifacts flickering up and down the edges of the blue block:

    <Window x:Class="LinearAnimate.Window1" xmlns:x="Definition"
            xmlns="http://schemas.microsoft.com/2003/xaml"
            Text="LinearAnimate" Height="600" Width="800">
      <Canvas>
        <Rectangle Fill="Blue" RectangleWidth="30"
                   RectangleHeight="30" RectangleTop="10">
          <Rectangle.RectangleLeft>
            <LengthAnimation From="0" To="600" Duration="5"
                             RepeatDuration="Indefinite" />
          </Rectangle.RectangleLeft>
        </Rectangle>
      </Canvas>
    </Window>

This suggests that the updates are not being delivered to the frame buffer in a way that is synchronized with the monitor. The movement is also a bit lumpy on my system, which would also be consistent with unsynchronized updates. My CPU's load is very low when this is running, so I don't think it's just a case of my system not coping. (Although since there's no way to monitor the load on the graphics processor, it's hard to be sure - it might just be that my CPU is idle but the graphics card is maxed out. Although I'd be surprised if drawing a single blue block would max out the GPU, given that I've seen this machine cope admirably with much more complex graphics.)

These kinds of problems have always been rife in computer video and animation. The broadcast video engineer in me has long been disappointed at the low quality of moving pictures achieved by desktop computers. (And not just PCs by the way.) I'm hoping that the fact that these problems are present today is simply because this is an early preview of Avalon. I very much hope that by the time Avalon ships, we'll see better solutions to these issues. Specifically, I want to see updates to frame buffers synchronized with the monitor to avoid tearing, and I want the animation frame rate to be matched exactly to the monitor to avoid presentation-induced motion aliasing artifacts.